Test Starters

This section explains how to create and run test cases for your Starters.

Overview

What are Starter tests

Starter tests enable you to create unit tests for your Starters. The tests ensure that the Starters will behave as expected.

When you use the stk create starter command to create a Starter, you will find a test folder structure inside the generated files. This structure contains the unit tests.

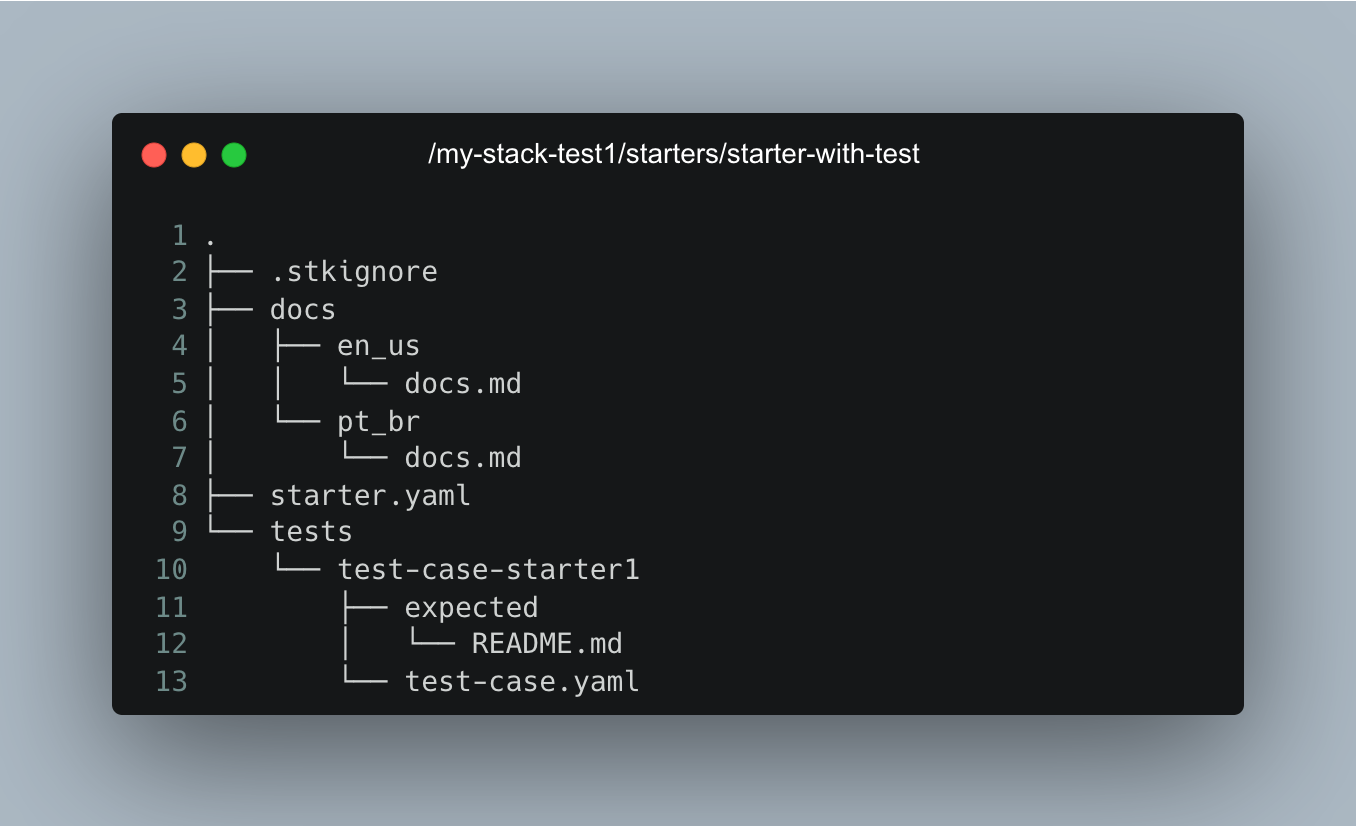

The folder structure starts with a directory called 'tests', alongside the other generated files, as shown in the image above.

Folders used in tests

Below you will find details about each folder and its objectives:

tests/ folder

Inside the Starter, the "tests" folder contains the test cases related to that Starter. Each test case contains an "expected" folder, described below:

expected/

Each test case has an "expected" folder that must contain all the files necessary for your Application or Infrastructure, such as project files and source code. For example, if your Starter includes Plugins that add a Java application or Infrastructure implementation, the "expected" folder should contain the Java project files with all relevant implementations.

The configuration files generated when you create an Application or Infrastructure (app-spec.yaml and infra-spec.yaml) don't need to be added to the "expected" folder.

Test case files

Each test case has a manifest in .yaml format called test-case.yaml, which provides information for testing your Starter.

See below the test-case.yaml of a Starter:

    schema-version: v2
    kind: test
    metadata:
      name: my-app
      description: my-description
    spec:
      type: starter
      plugins:
        - name: studio-slug/plugin-name
          alias: example-plugin-alias
          inputs: {}
        - name: studio2-slug/plugin2-name
          alias: example2-plugin-alias
          inputs: {}

Fields from the test-case.yaml file

metadata:

Refers to the information required when creating an Application or Infrastructure. It consists of two fields:

- name: The name of the Application or Infrastructure you want to create.

- description: An optional field where you can briefly describe the Application or Infrastructure.

spec:

- type: The kind of manifest. Its value is always "starter".

- plugins: The list of required and optional Plugins of the Starter you intend to test. Each Plugin in the list has the following fields:

  - name: The Plugin name and its origin, the same as declared in the Starter. The origin is the Studio slug. The syntax is studio-slug/plugin-name.

  - alias: The alias used when applying the Plugin.

  - inputs: The name and value of each input of the Plugin.

  - connections: The list of Connection Interfaces required or generated by the Plugin.
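The field rules above can be sketched as a small check over a parsed manifest. This is only an illustration, not how the STK CLI validates test cases: the function name `manifest_problems` is hypothetical, and the manifest is assumed to be already loaded into a Python dictionary by a YAML parser of your choice.

```python
from __future__ import annotations


def manifest_problems(manifest: dict) -> list[str]:
    """Return the problems found in a parsed test-case.yaml mapping (hypothetical helper)."""
    problems = []
    # The examples in this guide always use "kind: test".
    if manifest.get("kind") != "test":
        problems.append("kind should be 'test'")
    # metadata.name is needed to create the Application or Infrastructure;
    # metadata.description is optional, so it is not checked.
    metadata = manifest.get("metadata") or {}
    if not metadata.get("name"):
        problems.append("metadata.name is missing")
    # spec.type is always "starter" for a Starter test case.
    spec = manifest.get("spec") or {}
    if spec.get("type") != "starter":
        problems.append("spec.type should be 'starter'")
    # Each Plugin name follows the studio-slug/plugin-name syntax.
    for index, plugin in enumerate(spec.get("plugins") or []):
        slug, separator, plugin_name = str(plugin.get("name", "")).partition("/")
        if not (slug and separator and plugin_name):
            problems.append(f"plugins[{index}].name should look like studio-slug/plugin-name")
    return problems


if __name__ == "__main__":
    example = {
        "schema-version": "v2",
        "kind": "test",
        "metadata": {"name": "my-app", "description": "my-description"},
        "spec": {
            "type": "starter",
            "plugins": [
                {"name": "studio-slug/plugin-name", "alias": "example-plugin-alias", "inputs": {}}
            ],
        },
    }
    print(manifest_problems(example))
```

An empty list means the manifest follows the shape described in this section; it says nothing about whether the Plugins exist in your Stack.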

Creating test cases

Before you begin

For existing Starters that do not have test cases, you must manually create the following structure and populate the test case files.

Inside your Stack, in the starters folder:

- Create the tests folder;

- Inside the tests folder, create a folder with the name of your test case, for example, "test-case-001";

- Inside your test case folder:

  - Create the file test-case.yaml and paste the contents below into it:

        schema-version: v1
        kind: test
        metadata:
          name: my-app
          description: my-description
        spec:
          type: starter
          plugins:
            - name: studio-slug/plugin-name
              alias: example-plugin-alias
              inputs: {}
            - name: studio2-slug/plugin2-name
              alias: example2-plugin-alias
              inputs: {}

  - Create the expected folder.
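If you need to repeat these manual steps for several Starters, they can be scripted. The sketch below is an assumption-laden convenience, not an STK feature: `scaffold_test_case` and `base_dir` are hypothetical names, and `base_dir` stands for the folder where the tests folder should be created.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

# Same starting content as the manifest shown above; adjust the Plugins to your Starter.
TEST_CASE_TEMPLATE = """\
schema-version: v1
kind: test
metadata:
  name: my-app
  description: my-description
spec:
  type: starter
  plugins:
    - name: studio-slug/plugin-name
      alias: example-plugin-alias
      inputs: {}
"""


def scaffold_test_case(base_dir: Path, case_name: str) -> Path:
    """Create tests/<case_name>/ with a test-case.yaml and an empty expected folder."""
    case_dir = Path(base_dir) / "tests" / case_name
    # Creates tests/, the test case folder, and expected/ in one call.
    (case_dir / "expected").mkdir(parents=True, exist_ok=True)
    manifest = case_dir / "test-case.yaml"
    # Never overwrite a manifest that someone has already filled in.
    if not manifest.exists():
        manifest.write_text(TEST_CASE_TEMPLATE, encoding="utf-8")
    return case_dir


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        case = scaffold_test_case(Path(tmp), "test-case-001")
        for path in sorted(case.rglob("*")):
            print(path.relative_to(tmp).as_posix())
```

The expected folder is created empty, so the test case is skipped until you add the Application or Infrastructure files to it.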

Fill in the Starter test

Step 1. Add the Application or Infrastructure files to the test case

Add a copy of all your Application or Infrastructure files to the 'expected' folder, except the app-spec.yaml and infra-spec.yaml files.
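One way to make this copy while leaving out the two spec files is sketched below. The names `copy_to_expected`, `project_dir`, and `expected_dir` are hypothetical, and the sketch assumes the 'expected' folder is not located inside the project being copied.

```python
import shutil
import tempfile
from pathlib import Path

# Files that this guide says must stay out of the expected folder.
EXCLUDED = ("app-spec.yaml", "infra-spec.yaml")


def copy_to_expected(project_dir, expected_dir):
    """Copy the project tree into expected_dir, skipping the excluded spec files."""
    shutil.copytree(
        project_dir,
        expected_dir,
        # ignore_patterns is applied in every directory, so a nested file
        # with one of these names is skipped as well.
        ignore=shutil.ignore_patterns(*EXCLUDED),
        # Allows copying into an expected folder that already exists (Python 3.8+).
        dirs_exist_ok=True,
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp) / "my-app"
        (project / "src").mkdir(parents=True)
        (project / "src" / "main.py").write_text("print('hello')\n", encoding="utf-8")
        (project / "app-spec.yaml").write_text("placeholder: true\n", encoding="utf-8")
        expected = Path(tmp) / "expected"
        copy_to_expected(project, expected)
        print(sorted(p.relative_to(expected).as_posix() for p in expected.rglob("*")))
```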

Step 2. Fill in the test-case.yaml file

In the test-case.yaml file of your test, check the data and fill in the inputs for your test according to your Starter Plugins.

Fill in the inputs

Consider a Plugin of your Starter with the following inputs. The name fields of the inputs are the ones you should add to the test case:

    spec:
      # .
      # .
      # . Other spec fields above...
      inputs:
        - label: Database name
          name: database_name
          type: text
          required: true

The completed test file should look like the example below. The syntax of the inputs is:

    inputs:
      input_name: "input value"

Example:

    schema-version: v1
    kind: test
    metadata:
      name: my-app
      description: my-description
    spec:
      type: starter
      plugins:
        - name: studio-slug/plugin-name
          alias: example-plugin-alias
          inputs:
            database_name: "The name provided for the database_name input"
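Before running the tests, you may want to confirm that every required input of a Plugin has a value in the test case. The sketch below illustrates that check over already-parsed data. `missing_required_inputs` is a hypothetical helper, not part of the STK CLI, which performs its own validation when the test runs.

```python
from __future__ import annotations


def missing_required_inputs(plugin_inputs: list[dict], test_inputs: dict) -> list[str]:
    """Return the names of required Plugin inputs that the test case does not fill in."""
    return [
        item["name"]
        for item in plugin_inputs
        # Only inputs marked "required: true" in the Plugin must be present.
        if item.get("required") and item["name"] not in test_inputs
    ]


if __name__ == "__main__":
    # The "inputs" list from the Plugin shown above.
    plugin_inputs = [
        {"label": "Database name", "name": "database_name", "type": "text", "required": True}
    ]
    # The "inputs" mapping of that Plugin in test-case.yaml.
    test_inputs = {"database_name": "The name provided for the database_name input"}
    print(missing_required_inputs(plugin_inputs, test_inputs))
```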

Fill in required and generated Connections

If the Plugin in your Starter generates or requires Connections, you must add them to the test case. Consider the examples below:

Plugin that requires a Connection

    schema-version: v4
    kind: plugin
    metadata:
      name: plugin-infra-req-s3
      display-name: plugin-infra-req-s3
      description: Plugin that requires S3
      version: 0.0.1
      picture: plugin.png
    spec:
      type: infra
      compatibility:
        - python
      docs: #optional
        pt-br: docs/pt-br/docs.md
        en-us: docs/en-us/docs.md
      single-use: False
      runtime:
        environment:
          - terraform-1-4
          - aws-cli-2
          - git-2
      repository: https://github.com
      technologies: # Ref: https://docs.stackspot.com/create-use/create-content/yaml-files/plugin-yaml/#technologies-1
        - Api
      inputs:
        - label: Connection interface for your aws-s3-req-alias
          name: aws-s3-req-alias
          type: required-connection
          connection-interface-type: aws-s3-conn
      stk-projects-only: false

Plugin that generates a Connection

    schema-version: v4
    kind: plugin
    metadata:
      name: plugin-infra-gen-s3
      display-name: plugin-infra-gen-s3
      description: Plugin to generate S3
      version: 0.0.1
      picture: plugin.png
    spec:
      type: infra
      compatibility:
        - python
      docs: #optional
        pt-br: docs/pt-br/docs.md
        en-us: docs/en-us/docs.md
      single-use: False
      runtime:
        environment:
          - terraform-1-4
          - aws-cli-2
          - git-2
      technologies: # Ref: https://docs.stackspot.com/create-use/create-content/yaml-files/plugin-yaml/#technologies-1
        - Api
      stk-projects-only: false
      inputs:
        - label: Inform your connection for bucket_s3
          name: bucket_s3
          type: generated-connection
          connection-interface-type: aws-s3-conn
          outputs:
            - from: aws-s3-alias-arn
              to: arn
            - from: aws-s3-alias-bucket_name
              to: bucket_name

For the test case, follow the syntax of the examples below:

Test case with required connection

    schema-version: v2
    kind: test
    metadata:
      name: my-app
      description: my-description
    spec:
      type: starter
      plugins:
        - name: studio-slug/plugin-name
          alias: example-plugin-alias
          inputs:
            database_name: "The name provided for the database_name input"
            aws-s3-req-alias:
              selected: my-s3-req
              outputs:
                arn: "my-s3-arn"
                bucket_name: "my-bucket-name"

Test case with generated connection

    schema-version: v2
    kind: test
    metadata:
      name: my-app
      description: my-description
    spec:
      type: starter
      plugins:
        - name: studio-slug/plugin-name
          alias: example-plugin-alias
          inputs:
            database_name: "The name provided for the database_name input"
            bucket_s3:
              selected: my-s3-req
              outputs:
                arn: "aws-s3-alias-arn"
                bucket_name: "aws-s3-alias-bucket_name"
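In both test cases above, the Connection is declared under the Plugin's inputs, keyed by the name of the connection input, with a selected value and an outputs mapping. The sketch below checks a test case against that shape. It is inferred only from the examples in this section, so treat the rule itself as an assumption; `connection_entry_problems` is a hypothetical helper.

```python
from __future__ import annotations

# Input types that declare a Connection in the Plugin examples above.
CONNECTION_TYPES = {"required-connection", "generated-connection"}


def connection_entry_problems(plugin_inputs: list[dict], test_inputs: dict) -> list[str]:
    """List connection inputs whose test case entry lacks the shape used in the examples."""
    problems = []
    for item in plugin_inputs:
        if item.get("type") not in CONNECTION_TYPES:
            continue  # ordinary inputs are plain name/value pairs
        entry = test_inputs.get(item["name"])
        if not isinstance(entry, dict):
            problems.append(f"{item['name']}: no connection entry in the test case")
            continue
        # The examples declare both keys for required and generated Connections.
        for key in ("selected", "outputs"):
            if key not in entry:
                problems.append(f"{item['name']}: missing '{key}'")
    return problems


if __name__ == "__main__":
    plugin_inputs = [
        {
            "label": "Connection interface for your aws-s3-req-alias",
            "name": "aws-s3-req-alias",
            "type": "required-connection",
            "connection-interface-type": "aws-s3-conn",
        }
    ]
    test_inputs = {
        "aws-s3-req-alias": {
            "selected": "my-s3-req",
            "outputs": {"arn": "my-s3-arn", "bucket_name": "my-bucket-name"},
        }
    }
    print(connection_entry_problems(plugin_inputs, test_inputs))
```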

Run Starter tests

You must run the tests from inside your Starter's folder. Access your Stack, open the "starters" folder, and run the commands below according to the tests you want to run:

Run all Starter tests

Run the command:

stk test starter

Run a specific Starter test

Run the test command with the name of the test folder:

stk test starter [test-case-name]

Example:

stk test starter test-case-connection-gen001

Run tests with the '--verbose' option

You can use the --verbose option to display detailed test results.

Examples:

stk test starter --verbose

stk test starter test-case-connection-gen001 --verbose
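If you want to call these commands from a script, for example in a local automation, the command line can be assembled as sketched below. Only the arguments shown in this section are used. The function names and the `starters_dir` parameter are hypothetical, and this guide does not document the exit codes of the command, so the returned code is passed along without interpretation.

```python
import shutil
import subprocess


def stk_test_command(test_case=None, verbose=False):
    """Build the argument list for 'stk test starter [test-case-name] [--verbose]'."""
    command = ["stk", "test", "starter"]
    if test_case:
        command.append(test_case)  # name of the test case folder
    if verbose:
        command.append("--verbose")  # detailed test results
    return command


def run_starter_tests(starters_dir, test_case=None, verbose=False):
    """Run the tests from the given folder and return the process exit code."""
    if shutil.which("stk") is None:
        raise FileNotFoundError("the stk CLI was not found on PATH")
    completed = subprocess.run(
        stk_test_command(test_case, verbose),
        cwd=starters_dir,  # the command must run inside the Starter's folder
        check=False,
    )
    return completed.returncode


if __name__ == "__main__":
    print(" ".join(stk_test_command("test-case-connection-gen001", verbose=True)))
```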

Error scenarios and ignored tests

It is crucial to quickly identify errors found in your Starters during test case execution. Please review the mapped errors and scenarios below to adjust your Starters accordingly.

Errors mapped in the Starter:

- Code: STK_MESSAGE_BUSINESS_EXCEPTION_FOLDER_HAS_NO_TESTS_FOLDER_ERROR
  Scenario: The tests folder was not found;

- Code: STK_MESSAGE_BUSINESS_EXCEPTION_FOLDER_HAS_NO_TESTS_FOUND_ERROR
  Scenario: No test cases were found in the tests folder;

- Code: STK_MESSAGE_BUSINESS_EXCEPTION_TEST_STARTER_INVALID_STARTER_MANIFEST_ERROR
  Scenario: The Starter manifest (starter.yaml) could not be converted because it is invalid;

- Code: STK_MESSAGE_BUSINESS_EXCEPTION_TEST_STARTER_STACK_NOT_FOUND_ERROR
  Scenario: The Stack manifest (stack.yaml) was not found in the Starter root directories;

- Code: STK_MESSAGE_BUSINESS_EXCEPTION_TEST_STARTER_INVALID_STACK_MANIFEST_ERROR
  Scenario: The Stack manifest (stack.yaml) could not be converted because it is invalid;

- Code: STK_MESSAGE_BUSINESS_EXCEPTION_MISSING_REQUIRED_INPUTS_ERROR
  Scenario: The required inputs are not included in the test case manifest (test-case.yaml);

- Code: STK_MESSAGE_BUSINESS_EXCEPTION_TEST_STARTER_NO_TESTS_FOUND_WITH_TEST_NAME_ERROR
  Scenario: No test case with the given name was found.

Skipped tests:

- When the expected folder does not exist in the test case directory;

- When the expected folder is empty;

- When the test case manifest (test-case.yaml) is invalid;

- When the test case manifest (test-case.yaml) could not be converted.

Test case failure when creating an Application or Infrastructure with Starter:

- When the required Plugins are not included in the test case manifest (test-case.yaml);

- When the Plugin(s) were declared in the test case manifest, but are not part of the Stack (stack.yaml);

- When the required inputs are not included in the test case manifest (test-case.yaml);

- When the input(s) do not follow the pattern (regex) defined in the input;

- When the required Connections are not declared in the test case manifest;

- When an unexpected error occurs during the creation of the Application or Infrastructure.
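To see ahead of time whether an input value would break the pattern rule in the list above, you can test the value against the regex yourself. The sketch below assumes the whole value has to match the pattern, which this guide does not state, so the STK CLI may apply the regex differently. `matches_pattern` is a hypothetical helper.

```python
import re


def matches_pattern(value: str, pattern: str) -> bool:
    """Return True when the whole value matches the regex (full match is an assumption)."""
    return re.fullmatch(pattern, value) is not None


if __name__ == "__main__":
    # Hypothetical pattern for a database name: lowercase letters and underscores.
    pattern = r"[a-z_]+"
    print(matches_pattern("orders_db", pattern))
    print(matches_pattern("Orders DB", pattern))
```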

Test case failure when validating generated files:

- When the Starter generated an unexpected file;

- When the Starter generated an unexpected folder;

- When the Starter did not generate the expected folder;

- When the Starter did not generate the expected file;

- When an unexpected failure occurs during validation of generated files.
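The four file and folder scenarios above amount to comparing two directory trees. The sketch below shows one way to reproduce that comparison locally when you are debugging a failing test case. It is not the STK CLI's implementation, and `compare_trees` and its report keys are hypothetical names.

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def relative_entries(root: Path) -> set[tuple[str, bool]]:
    """Collect (relative path, is_folder) for everything below root."""
    return {(p.relative_to(root).as_posix(), p.is_dir()) for p in root.rglob("*")}


def compare_trees(expected_dir, generated_dir) -> dict[str, list[str]]:
    """Report files and folders that are missing from, or unexpected in, generated_dir."""
    expected = relative_entries(Path(expected_dir))
    generated = relative_entries(Path(generated_dir))
    report = {
        "missing_files": [],
        "missing_folders": [],
        "unexpected_files": [],
        "unexpected_folders": [],
    }
    # Present in expected only: the Starter did not generate it.
    for path, is_folder in sorted(expected - generated):
        report["missing_folders" if is_folder else "missing_files"].append(path)
    # Present in generated only: the Starter generated something unexpected.
    for path, is_folder in sorted(generated - expected):
        report["unexpected_folders" if is_folder else "unexpected_files"].append(path)
    return report


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        expected = Path(tmp) / "expected"
        generated = Path(tmp) / "generated"
        (expected / "docs").mkdir(parents=True)
        generated.mkdir()
        (expected / "docs" / "readme.md").write_text("docs\n", encoding="utf-8")
        (generated / "extra.txt").write_text("extra\n", encoding="utf-8")
        print(compare_trees(expected, generated))
```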

Test case failure when validating the content of generated files:

- When the Starter generated a line with different content than expected;

- When the Starter generated fewer lines than expected;

- When the Starter generated more lines than expected.
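The three content scenarios above can be illustrated with a line-by-line comparison of an expected file and a generated file. This is a minimal sketch for local debugging, not the comparison the STK CLI performs. `compare_lines` and its message wording are hypothetical.

```python
from __future__ import annotations


def compare_lines(expected_text: str, generated_text: str) -> list[str]:
    """Describe how the generated content differs from the expected content."""
    expected = expected_text.splitlines()
    generated = generated_text.splitlines()
    problems = []
    # Lines present on both sides are compared position by position.
    for number, (want, got) in enumerate(zip(expected, generated), start=1):
        if want != got:
            problems.append(f"line {number}: expected {want!r}, got {got!r}")
    # A difference in length means lines are missing or were added at the end.
    if len(generated) < len(expected):
        problems.append(f"fewer lines than expected: {len(generated)} instead of {len(expected)}")
    elif len(generated) > len(expected):
        problems.append(f"more lines than expected: {len(generated)} instead of {len(expected)}")
    return problems


if __name__ == "__main__":
    expected = "name: my-app\nport: 8080\n"
    generated = "name: my-app\nport: 9090\nextra: true\n"
    for problem in compare_lines(expected, generated):
        print(problem)
```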